[Part 1] What I Learned from Working on 3 School Projects at the Same Time

📅 Post Date: 2021-05-28

📆 Edit Date: 2021-10-09

As Computer Science students, we always have school projects where we need to work with others. However, everyone has their own personal life, heavy coursework, club activities, internships, part-time jobs, and job hunting. It is not easy to convince teammates to work on school projects when they are all seniors about to graduate. Ultimately, you might end up being the only person who cares about the project and doing all the work yourself. I decided to write this post to help prevent this situation.

In this post, I will share what I have learned from working on 3 school projects with classmates at the same time. But first, I will introduce the 3 classes and the project I participate in for each. Feel free to skip the technical details and go straight to Part 2, because I know this part is long and a little too technical…

What these 3 school projects are

I am a senior studying Computer Science at San Jose State University, and this is almost my last semester there. This semester, I am taking three CS classes:

- CS 157C NoSQL Database Systems

- CS 158B Computer Network Management

- CS 160 Software Engineering

These are advanced CS courses, and most of my classmates are seniors.

CS 157C NoSQL Database Systems

In NoSQL Database Systems, we learn the fundamentals of NoSQL databases, MongoDB, and Cassandra. The lectures mostly cover theory, such as the CAP theorem, replication, sharding, and indexing, and we also have coding assignments to practice MongoDB and Cassandra. This class is quite substantial, and I learn a lot from the instructor.
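To make the idea of sharding a little more concrete, here is a minimal Python sketch of hash-based sharding: each document's shard key is hashed, and the hash decides which shard stores it. This is a simplified illustration assuming three shards and a plain modulo mapping; MongoDB's actual hashed sharding hashes keys into 64-bit values and assigns ranges of those values (chunks) to shards, so this is not MongoDB's algorithm. The keys are hypothetical.

```python
import hashlib


def shard_for(key: str, num_shards: int = 3) -> int:
    """Map a shard key to a shard index by hashing it (simplified, not MongoDB's algorithm)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the digest as an unsigned integer.
    value = int.from_bytes(digest[:8], "big")
    return value % num_shards


def distribute(keys, num_shards: int = 3):
    """Group keys by the shard they would land on."""
    shards = {i: [] for i in range(num_shards)}
    for key in keys:
        shards[shard_for(key, num_shards)].append(key)
    return shards


if __name__ == "__main__":
    listing_ids = [f"listing-{i}" for i in range(12)]  # hypothetical shard keys
    for shard, keys in distribute(listing_ids).items():
        print(shard, len(keys))
```

One drawback of plain modulo is that changing the number of shards remaps most keys, which is one reason real systems move data around in ranges (chunks) instead.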

Toward the end of the semester, we have roughly four weeks to work on a NoSQL project in a three-person team (including me).

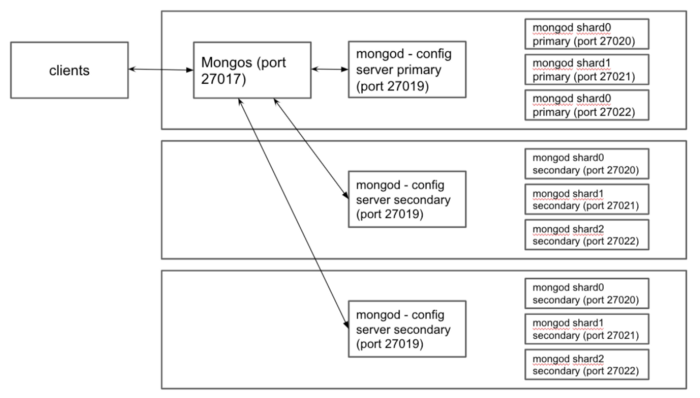

Our project is to build a MongoDB sharded cluster that consists of one mongos router, three config servers in a replica set, and three shards (each a three-member replica set) spread across three AWS EC2 nodes. See the architecture diagram.
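One way to picture this topology is to generate the list of processes each node runs. The sketch below assumes each EC2 node hosts one member of the config server replica set and one member of each shard replica set (3 + 9 = 12 `mongod` processes in total), with `mongos` on the first node. The hostnames, ports, replica set names, and data paths are hypothetical; the flags (`--configsvr`, `--shardsvr`, `--replSet`, `--port`, `--dbpath`, `--bind_ip`, `--configdb`) are standard `mongod`/`mongos` options.

```python
from typing import List

HOSTS = ["node1", "node2", "node3"]  # hypothetical EC2 hostnames
CONFIG_RS = "cfgRS"                  # hypothetical config replica set name
CONFIG_PORT = 27019
SHARD_PORTS = {"shard1": 27018, "shard2": 27028, "shard3": 27038}  # hypothetical ports
MONGOS_PORT = 27017


def mongod_commands(host: str) -> List[str]:
    """Return the mongod command lines one node would run under this assumed layout."""
    cmds = [
        f"mongod --configsvr --replSet {CONFIG_RS} --port {CONFIG_PORT} "
        f"--dbpath /data/{CONFIG_RS} --bind_ip localhost,{host}"
    ]
    # One member of every shard replica set lives on every node.
    for rs_name, port in SHARD_PORTS.items():
        cmds.append(
            f"mongod --shardsvr --replSet {rs_name} --port {port} "
            f"--dbpath /data/{rs_name} --bind_ip localhost,{host}"
        )
    return cmds


def mongos_command() -> str:
    """mongos only needs to know the config server replica set and its members."""
    members = ",".join(f"{h}:{CONFIG_PORT}" for h in HOSTS)
    return (
        f"mongos --configdb {CONFIG_RS}/{members} "
        f"--port {MONGOS_PORT} --bind_ip localhost,{HOSTS[0]}"
    )


if __name__ == "__main__":
    for host in HOSTS:
        for cmd in mongod_commands(host):
            print(f"[{host}] {cmd}")
    print(f"[{HOSTS[0]}] {mongos_command()}")
```

After the processes start, each replica set still has to be initiated (for example with `rs.initiate()` in `mongosh`) and each shard registered through `mongos` (for example with `sh.addShard()`).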

Simply speaking, we need to:

- Download and clean data from a public dataset (we chose the Airbnb housing data recommended by MongoDB)

- Configure the MongoDB cluster on three AWS EC2 instances

- Load the dataset into the cluster

- Create and test at least 15 user-defined functions using PyMongo at the application level
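The cleaning and user-defined-function steps above might look roughly like the sketch below. The field names (`price`, `neighbourhood`) and the function `avg_price_by_neighbourhood` are hypothetical illustrations, not our actual code. The function accepts anything that behaves like a PyMongo `Collection` and uses the real `aggregate` method; loading the data would similarly be a `collection.insert_many(clean_listings(rows))` call through `mongos`.

```python
from typing import Any, Dict, Iterable, List, Optional


def parse_price(raw: Any) -> Optional[float]:
    """Convert a price such as "$1,200.00" or 85 to a float; return None if unusable."""
    if raw is None:
        return None
    if isinstance(raw, (int, float)):
        return float(raw)
    text = str(raw).replace("$", "").replace(",", "").strip()
    try:
        return float(text)
    except ValueError:
        return None


def clean_listings(rows: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep only rows with a usable price and neighbourhood (hypothetical field names)."""
    cleaned = []
    for row in rows:
        price = parse_price(row.get("price"))
        neighbourhood = (row.get("neighbourhood") or "").strip()
        if price is None or not neighbourhood:
            continue
        cleaned.append({**row, "price": price, "neighbourhood": neighbourhood})
    return cleaned


def avg_price_by_neighbourhood(collection, limit: int = 5) -> List[Dict[str, Any]]:
    """Hypothetical user-defined function: neighbourhoods ranked by average price.

    `collection` is expected to behave like a PyMongo Collection, e.g.
    MongoClient("mongodb://<mongos-host>:27017")["airbnb"]["listings"].
    """
    pipeline = [
        {"$group": {"_id": "$neighbourhood",
                    "avgPrice": {"$avg": "$price"},
                    "count": {"$sum": 1}}},
        {"$sort": {"avgPrice": -1}},
        {"$limit": limit},
    ]
    return list(collection.aggregate(pipeline))
```

Keeping the query logic in plain functions that take a collection makes each function easy to test against a stub before running it on the real cluster.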

This is a relatively short and easy project; the most difficult part is configuring the cluster on AWS. You can visit the repository for more details.

CS 158B Computer Network Management

This is the second Computer Networks course, and it focuses more on network management. In this class, we learn how to interpret different kinds of network packets using the RFCs and Wireshark, and the topics we cover include ARP, IP, TCP, UDP, DHCP, DNS, SNMP, TFTP, PXE, and certificate management. We also learn the theory and some practical usage of VLANs, VPNs, NAT, ICMP, network attacks, and site reliability engineering (SRE). This class is also extremely substantial and demanding.
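Interpreting a packet "based on the RFCs" mostly means reading fields at the offsets the RFC defines. As a small illustration, this sketch decodes the fixed 20-byte IPv4 header laid out in RFC 791 from raw bytes (for example, bytes copied out of a Wireshark capture); it ignores IP options and does not verify the checksum.

```python
import socket
import struct


def parse_ipv4_header(packet: bytes) -> dict:
    """Decode the fixed 20-byte IPv4 header described in RFC 791 (options ignored)."""
    if len(packet) < 20:
        raise ValueError("packet too short for an IPv4 header")
    # ! = network byte order; B = 1 byte, H = 2 bytes, 4s = 4 raw bytes.
    (ver_ihl, _tos, total_length, identification, flags_frag,
     ttl, protocol, _checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", packet[:20])
    return {
        "version": ver_ihl >> 4,
        "ihl_bytes": (ver_ihl & 0x0F) * 4,         # header length is counted in 32-bit words
        "total_length": total_length,
        "identification": identification,
        "dont_fragment": bool(flags_frag & 0x4000),
        "fragment_offset": flags_frag & 0x1FFF,
        "ttl": ttl,
        "protocol": protocol,                      # e.g. 1 = ICMP, 6 = TCP, 17 = UDP
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }
```

The same approach (a `struct` format string that mirrors the RFC's field diagram) works for UDP, TFTP, and other fixed-layout headers.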

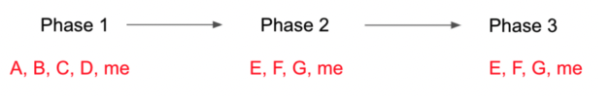

Interestingly, we have a semester-long project with three phases, and we switch to a different team at the start of Phase 2. As a result, I get to work with 7 different people in this class (4 in Phase 1 and 3 in Phases 2 and 3).

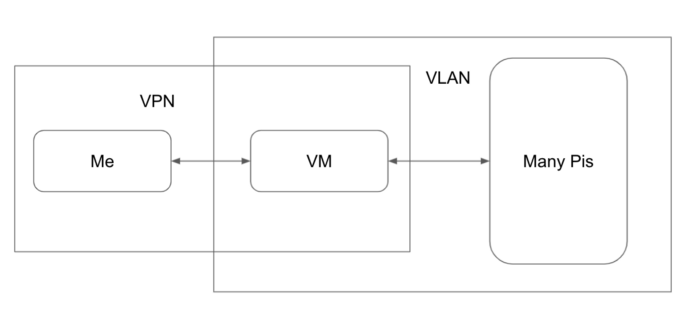

The project is to manage a cluster of 10 Raspberry Pis (models 3 and 4). Most of the configuration and setup is based on the work of previous students, and whatever we implement will be passed on to next semester's students, so the repository will eventually become huge (it is already huge now).

This project is very complicated, but if you stick with it, you will learn a lot about IoT and Linux, specifically Ubuntu. To access the Raspberry Pis, we first need to connect to a team VM on campus to which all the Pis are connected, and the instructor has set up several VLANs to separate different teams' Pis. To connect to the team VM remotely, we use OpenVPN, which is also set up by the instructor.

Phase 1

In this phase, we need to set up a PXE server to boot all the Pis over the network. Unlike the computers we use every day, some IoT devices do not have a boot image in flash storage, so they request a boot image and all other necessary information and files from the network. A PXE server in our setup consists of a DHCP server for assigning IP addresses, a TFTP server for sending small files, and a TCP server for sending some commands and large files (the root image). After the Pis boot up, each Pi runs an SSH service, so we can access it from the VM. For Pis that do not have SSH initially, we use SNMP to reinstall and restart them.
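The TFTP part of network booting is simple enough to sketch. Per RFC 1350, a client sends a read request (RRQ) to UDP port 69, the server answers with DATA packets carrying up to 512 bytes each, and the client acknowledges every block; a block shorter than 512 bytes ends the transfer. The sketch below only builds and parses those packets (no sockets), and the filename in the usage note is hypothetical.

```python
import struct

# Opcodes defined in RFC 1350.
RRQ, WRQ, DATA, ACK, ERROR = 1, 2, 3, 4, 5
BLOCK_SIZE = 512


def build_rrq(filename: str, mode: str = "octet") -> bytes:
    """Read request: 2-byte opcode, filename, 0 byte, transfer mode, 0 byte."""
    return (struct.pack("!H", RRQ) + filename.encode("ascii") + b"\x00"
            + mode.encode("ascii") + b"\x00")


def parse_data(packet: bytes):
    """Split a DATA packet into (block number, payload)."""
    opcode, block = struct.unpack("!HH", packet[:4])
    if opcode != DATA:
        raise ValueError(f"expected DATA, got opcode {opcode}")
    return block, packet[4:]


def build_ack(block: int) -> bytes:
    """An ACK echoes the block number it acknowledges."""
    return struct.pack("!HH", ACK, block)


def is_last_block(payload: bytes) -> bool:
    """A payload shorter than the block size marks the end of the file."""
    return len(payload) < BLOCK_SIZE
```

For example, `build_rrq("pi01/cmdline.txt")` (a hypothetical path) produces the bytes a booting client would send, and the client would loop on `parse_data` and `build_ack` until `is_last_block` returns `True`.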

Phase 2

In this phase, we need to monitor some basic metrics on the Pis, such as CPU load, memory usage, and temperature. We set up a monitoring server on each Pi, and the VM, acting as the client, requests data from all the Pis and stores it as logs. Then, we create a Grafana dashboard on the VM to visualize the collected metrics.

At the end of this phase, the instructor let us experience what being “on call” feels like. We were told that there would be an outage, and we needed to figure out the root cause as soon as possible and write a postmortem report. So, we set up some pagers and alerts on Discord. When the outage happened, it was 2 a.m. in my time zone… After we manually checked everything, such as DHCP, TFTP, and iptables, we finally found that the IP routes were messed up. What an interesting experience!
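A per-Pi monitoring server essentially reads a few kernel files and reports the numbers. The sketch below parses the standard Linux sources `/proc/loadavg`, `/proc/meminfo`, and `/sys/class/thermal/thermal_zone0/temp` (which reports millidegrees Celsius), then checks the values against alert thresholds. The threshold values and the `check_alerts` helper are hypothetical, not the ones our team used.

```python
def parse_loadavg(text: str) -> float:
    """The first field of /proc/loadavg is the 1-minute load average."""
    return float(text.split()[0])


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo lines like 'MemTotal:  948280 kB' into integers (kB)."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            info[key.strip()] = int(parts[0])
    return info


def memory_used_percent(info: dict) -> float:
    total, available = info["MemTotal"], info["MemAvailable"]
    return 100.0 * (total - available) / total


def parse_temp(text: str) -> float:
    """thermal_zone*/temp is in millidegrees Celsius."""
    return int(text.strip()) / 1000.0


def _read(path: str) -> str:
    with open(path) as f:
        return f.read()


def collect() -> dict:
    """Take one snapshot of metrics on a Linux host such as a Raspberry Pi."""
    info = parse_meminfo(_read("/proc/meminfo"))
    return {
        "load1": parse_loadavg(_read("/proc/loadavg")),
        "mem_used_pct": memory_used_percent(info),
        "temp_c": parse_temp(_read("/sys/class/thermal/thermal_zone0/temp")),
    }


# Hypothetical alert thresholds.
DEFAULT_THRESHOLDS = {"load1": 4.0, "mem_used_pct": 90.0, "temp_c": 70.0}


def check_alerts(metrics: dict, thresholds: dict = DEFAULT_THRESHOLDS) -> list:
    """Return one message per metric that exceeds its threshold."""
    return [
        f"{name}={metrics[name]:.1f} exceeds {limit}"
        for name, limit in thresholds.items()
        if metrics.get(name, 0) > limit
    ]
```

The client on the VM would call something like `collect()` on each Pi periodically, log the results, and forward any `check_alerts` messages to a pager channel.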

Phase 3

In the last phase, we replace the monitoring mechanism from the previous phase with Prometheus and Node Exporter. A Node Exporter service runs on each Pi as the monitoring server, and Prometheus acts as the monitoring client and data source.
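Node Exporter serves its metrics over HTTP (by default on port 9100 at `/metrics`) in Prometheus' plain-text exposition format, which Prometheus scrapes periodically. To show what that data looks like, here is a simplified parser for the format; it skips `# HELP`/`# TYPE` comments and handles labels and an optional timestamp, but it is a sketch, not a full implementation of the specification (for example, it does not unescape label values).

```python
import re
from typing import Dict, List, Tuple

# metric_name{label="value",...} value [timestamp]
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)(?:\s+-?\d+)?$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_exposition(text: str) -> List[Tuple[str, Dict[str, str], float]]:
    """Return (metric name, labels, value) for each sample line."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and HELP/TYPE comments
        m = SAMPLE_RE.match(line)
        if not m:
            continue
        name, label_block, value = m.groups()
        labels = dict(LABEL_RE.findall(label_block or ""))
        samples.append((name, labels, float(value)))  # float() also accepts NaN/+Inf
    return samples
```

For example, a line such as `node_load1 0.25` becomes `("node_load1", {}, 0.25)`.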

In this phase, we also implement a local DNS server that uses SRV records to point to the services' locations (the Node Exporters on all 10 Pis). This way, a single domain name represents 10 different services (IP + port). Because Prometheus supports DNS SRV service discovery, we can do this easily by just modifying prometheus.yaml.
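The SRV idea can be sketched without a real DNS server: one service name maps to many `(priority, weight, port, target)` records, and the client turns them into `host:port` scrape targets. In Prometheus this is configured with a `dns_sd_configs` entry listing the SRV name under `names`. The service name `_node._tcp.pis.lab.` and the `pi01`…`pi10` hostnames below are hypothetical; port 9100 is Node Exporter's default. Real SRV clients choose among equal-priority records randomly by weight (RFC 2782), which this sketch simplifies to a stable sort.

```python
from typing import List, NamedTuple

# Prometheus side (shown as a comment), roughly:
#   scrape_configs:
#     - job_name: node
#       dns_sd_configs:
#         - names: ["_node._tcp.pis.lab"]
#           type: SRV


class SrvRecord(NamedTuple):
    name: str
    ttl: int
    priority: int
    weight: int
    port: int
    target: str


def make_zone_lines(service: str, hosts: List[str], port: int = 9100, ttl: int = 300) -> List[str]:
    """One SRV record per exporter, all under the same service name."""
    return [f"{service} {ttl} IN SRV 10 10 {port} {host}" for host in hosts]


def parse_srv(line: str) -> SrvRecord:
    """Parse a zone-file style line: name ttl class SRV priority weight port target."""
    name, ttl, _cls, rtype, priority, weight, port, target = line.split()
    if rtype != "SRV":
        raise ValueError(f"not an SRV record: {line}")
    return SrvRecord(name, int(ttl), int(priority), int(weight), int(port), target)


def scrape_targets(lines: List[str], service: str) -> List[str]:
    """Resolve one service name to host:port targets, similar to an SRV lookup."""
    records = [parse_srv(line) for line in lines]
    matches = [r for r in records if r.name == service]
    # Lower priority first; higher weight first within a priority (simplified).
    matches.sort(key=lambda r: (r.priority, -r.weight))
    return [f"{r.target.rstrip('.')}:{r.port}" for r in matches]
```

With ten records under one name, a single lookup yields all ten exporters, so adding or removing a Pi only requires editing the DNS zone, not the Prometheus configuration.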

As I said, this class is very difficult, and it really requires getting my hands dirty.

CS 160 Software Engineering

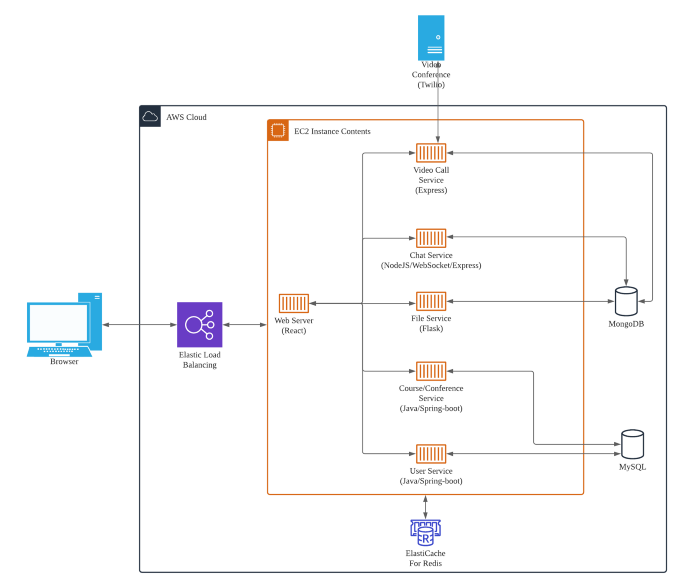

This is a project-based class. I work on a semester-long project with three other people, and we simulate an agile team developing a web-based application by defining requirements, designing, implementing, and testing.

We not only separate the frontend and backend but also develop the project using a microservice architecture, and at the moment, there are 7 different services. Because everyone has different skill sets and experience levels, we use a few too many languages and web frameworks for a school project. The final implementation looks a little different from the architecture diagram, but I would say it is about 80% similar. The project is an educational platform that combines the functions of Canvas and Zoom, and it supports four main features:

- Real-time chat

- Real-time video conference

- File sharing

- Course information dashboard

The technology includes:

- Frontend: ReactJS

- Backend: ExpressJS, NodeJS, Socket, Flask, Spring Boot

- Database: MongoDB, MySQL, Redis

- DevOps and cloud: AWS, Docker
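With seven services written in different frameworks, requests from the React frontend have to reach the right backend somehow. One common pattern is a gateway or reverse proxy that routes by URL prefix. The sketch below assumes that pattern; the prefixes, service names, and ports are hypothetical and are not our project's actual configuration.

```python
from typing import Dict, Optional

# Hypothetical mapping from URL prefix to the service that owns it.
ROUTES: Dict[str, str] = {
    "/api/chat": "http://chat-service:4000",
    "/api/video": "http://video-service:4001",
    "/api/files": "http://file-service:5000",
    "/api/courses": "http://course-service:8080",
}


def resolve(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream URL for a request path using longest-prefix matching."""
    best = None
    for prefix in routes:
        # Match whole path segments only, so "/api/chatroom" does not match "/api/chat".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    if best is None:
        return None
    return routes[best] + path[len(best):]
```

For example, `resolve("/api/chat/rooms/42")` returns `"http://chat-service:4000/rooms/42"`. Keeping the routing table in one place means the frontend only needs to know a single base URL, even as services are added.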

Ironically, the project could have been designed to be very small; for example, we could have used a single web framework for everything and implemented just one feature, such as a to-do list. But maybe I am crazy.